Has this ever happened to you? You’re in a long discussion with a chatbot, giving it instructions, context, and examples… and then it suddenly “forgets” all the details from the beginning of the chat. It starts asking strange questions and acts as if it’s meeting you for the first time.

The AI isn’t ignoring you, nor is it in a “bad mood.” The culprit is something called the context window.

Simply put, a context window is the amount of information that a neural network can “keep in mind” at any given moment.

To picture it, imagine you’re talking to a friend with a very short memory. They can perfectly recall the last 5-10 sentences of your conversation, but they have completely forgotten everything said before that. This “memory limit” is their context window: they use everything inside it to form a reply, and anything outside it is lost.

For example, you type: “Recommend a good movie about space.” It suggests “Interstellar.” Then you ask, “Who directed it?” The bot understands that “it” refers to “Interstellar” because your first question and its answer are still inside its context window.
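Under the hood, a chat app has to decide which earlier messages it still sends to the model. Below is a minimal sketch of the simplest strategy: keep the newest messages and drop the oldest ones once a token budget is exceeded. `estimate_tokens` and `trim_history` are hypothetical helpers. Tokens are approximated as words ÷ 0.75 rather than counted with a real tokenizer. Actual chatbots may truncate, summarize, or otherwise manage history differently.

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough estimate: 1 token ≈ 0.75 English words (not a real tokenizer)."""
    return math.ceil(len(text.split()) / 0.75)


def trim_history(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the newest messages whose estimated tokens fit in max_tokens.

    Walks backwards from the latest message and stops at the first one
    that would overflow the budget; it and everything older are dropped.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if used + cost > max_tokens:
            break  # this message and all older ones fall out of the window
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


chat = [
    "My name is Alex and I only watch sci-fi.",  # ~12 tokens
    "Recommend a good movie about space.",       # ~8 tokens
    "Interstellar.",                             # ~2 tokens
    "Who directed it?",                          # ~4 tokens
]
print(trim_history(chat, max_tokens=20))
# ['Recommend a good movie about space.', 'Interstellar.', 'Who directed it?']
```

With a 20-token budget, the three newest messages fit (about 14 tokens). Adding the introduction would push the total to about 26 tokens, so it is dropped. The bot still knows what “it” refers to, but it has “forgotten” the user’s name.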

How Big is This “Memory”?

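The size of the context window is measured in tokens: chunks of text that can be whole words, parts of words, or punctuation. They aren’t exactly words, but a good rule of thumb for English is that 1 token ≈ 0.75 words, or about 100 tokens for every 75 words.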

❗️An important nuance: This ratio varies by language. Languages with more complex word forms or non-Latin scripts are often split into more tokens to express the same idea, so they fill up the AI’s “memory” faster.
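To turn token counts into something more tangible, here is a small sketch that applies the rule of thumb in both directions. The helper names are hypothetical. The 0.75 default is the rough English ratio, and the 128,000-token window and 100,000-word book are illustrative sizes, not claims about any specific model or book. The `words_per_token` parameter lets you plug in a lower ratio for languages that tokenize less efficiently. The 0.5 used below is an assumption chosen purely for illustration.

```python
import math

# English rule of thumb: 1 token ≈ 0.75 words. Other languages often get
# fewer words per token, so treat this default as a rough English estimate.
WORDS_PER_TOKEN = 0.75


def words_that_fit(context_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Approximate number of words a context window of this size can hold."""
    return math.floor(context_tokens * words_per_token)


def tokens_needed(word_count: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Approximate number of tokens needed to hold word_count words."""
    return math.ceil(word_count / words_per_token)


print(words_that_fit(128_000))       # 96000 English words
print(tokens_needed(100_000))        # 133334 tokens for a 100,000-word book
print(words_that_fit(128_000, 0.5))  # 64000 words at a hypothetical 0.5 ratio
```

The same window holds noticeably fewer words when each word costs more tokens. That is why the “memory” fills up faster in some languages.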

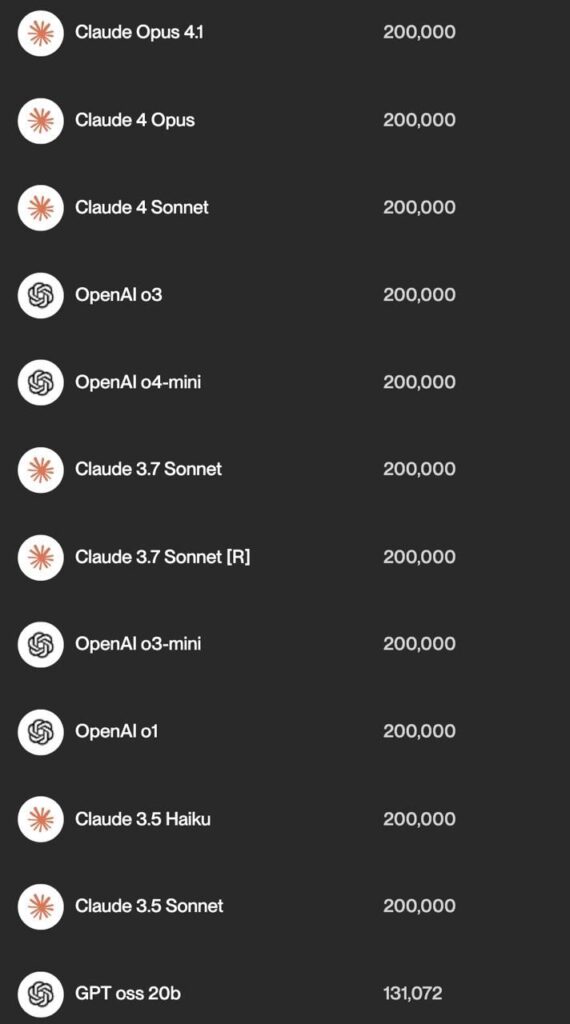

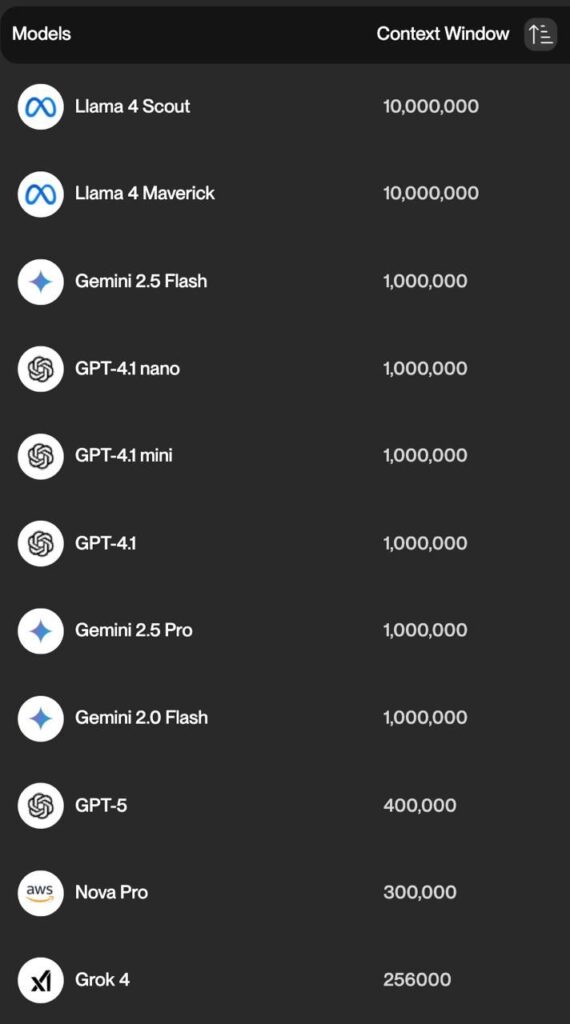

To help you visualize these sizes, I’ve attached a handy chart that compares the context windows of top AI models to the lengths of famous books. You can also check the context windows of the top models.

What Else Is Important to Know? (Pro-Tips)

* Stated vs. Practical Limits: Even with a huge advertised window, a model’s answer quality can degrade as the conversation approaches the maximum. It’s always a good idea to leave a buffer, especially since for many models the reply itself also takes up room in the window.

* API > Apps: The context window available to developers via a paid API is often much larger than what’s available in the free, public-facing chat application.
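Leaving a buffer comes down to simple arithmetic. The sketch below uses a hypothetical helper, `usable_input_tokens`. It rests on two assumptions: the model’s reply shares the same window as your input, and you keep an extra safety margin unused. The 20% default margin is an arbitrary illustrative choice, not a recommendation from any provider.

```python
def usable_input_tokens(context_window: int, reply_tokens: int,
                        safety_margin_pct: int = 20) -> int:
    """Tokens left for your prompt and chat history.

    Assumes the reply counts against the same window as the input, and
    reserves safety_margin_pct percent of the window as unused headroom.
    """
    if not 0 <= safety_margin_pct < 100:
        raise ValueError("safety_margin_pct must be in [0, 100)")
    margin = context_window * safety_margin_pct // 100  # integer math, no float rounding
    budget = context_window - margin - reply_tokens
    return max(budget, 0)  # never report a negative budget


# Illustrative 128,000-token window with room reserved for a 4,000-token reply:
print(usable_input_tokens(128_000, reply_tokens=4_000))  # 98400
```

In this example, roughly a quarter of the advertised window is set aside before you type anything. That gap is what keeps a long conversation away from the edge where quality tends to slip.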

So, the next time an AI “forgets” the start of your dialogue, you’ll know that its “short-term memory” simply filled up.

Have you encountered this “forgetfulness” with neural networks? In which tasks did it cause you the most trouble? Share your experience by submitting a story!